Why do magnets repel? Why do we slip on ice?

I really like this interview with Feynman where he is asked about the feeling of attraction and repulsion between two magnets. However, it is hard for him to explain what is going on in a way that will satisfy his audience, without going into a deeper level of explanation that they may not understand.

This sparks off a really interesting conversation about what level of explanation we are satisfied with.

But what has this got to do with Human Factors?

Quite a lot, I think. I’ll explain below.

Why do incidents happen?

Attributing human error as the cause for an incident or accident is the second most frustrating thing in the world for Human Factors folk. It misses all the interesting details of what may be going on, and what to do about it.

It’s like saying we slip on ice because it is slippery.

We learn from the Feynman interview that people generally don’t have the deeper framework to be dissatisfied with attributing human error as a cause. They don’t have that deeper level of understanding and the concepts to want to explain it better, deeper, to explore why that deviation happened.

- Was it a slip of attention, a lapse, a mistake or some form of non-compliance?

- What performance influencing factors increased or decreased the likelihood of error?

- How was the interface designed?

- What was the context?

- What were the circumstances?

- Were procedures and protocols being followed, and if not, why not?

- Etc.

Human Error as an explanation

To promote alternative explanations of these deviations in performance, some have taken to avoiding the term ‘human error’ altogether. The reason is that it draws people back to attributing blame to the ‘human’ involved, rather than looking at the systemic factors and interactions that have contributed to the event. It also treats the ‘error’ as an unusual event, different in type from normal work, whereas part of their argument is that these events are part of the normal variability in the system.

One of the downsides of strictly avoiding the term ‘human error’ is that we lose that bridge: a concept that people are familiar with, which can then be explained at a deeper level. It is an area of common ground, which can be advantageous.

Some people say that you don’t have a human error problem, because human errors don’t exist.

What you actually have is a system deviation problem (or similar) – but this can sound like semantics and word trickery.

Perhaps more fundamentally, and relatedly, we have an explanation problem. Human error satisfies those who are starting out in this area, but really we need to go deeper.

I think I first came across this sort of argument in one of Nancy Leveson’s books, where she said that ‘human error’ should go the same way as phlogiston. To save you looking it up, as I had to: in the 18th century, phlogiston was thought to be a fire-like element inside all combustible bodies. It was how people explained the behaviour of fire, an explanation we now know to be wrong.

Human Factors frameworks for understanding incidents

Feynman talks about needing a different framework of understanding to couch an explanation in. Human Factors as a discipline offers a framework for this, e.g. to resist blaming individuals, to understand the context, the psychology of what was going on at the time, the sociotechnical interactions and broader system influences that have led to the deviation.

Some frame this as moving on from the first story (which is about who has done what to contribute to the incident), to the second story (which is much more about why it happened).

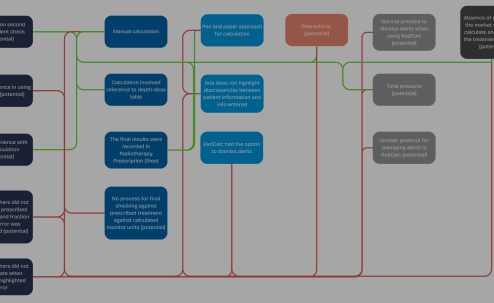

When thinking about incidents in particular there are more specific methods and accident models that emphasise certain elements and relationships and de-emphasise others. For example, Accimaps will draw attention to the latent conditions that influenced what has happened, whereas something like TABIE might draw attention to the potential failures for individual task steps.

These different frameworks get us to commit to certain details and types of explanation.

This may even start by getting us to focus on the specifics of the accident involved, versus looking at how normal work goes right and deviations from this.

Human Factors for understanding performance at work

Our attention doesn’t have to be just focused on incidents in hindsight.

Indeed, it can be beneficial to invest in tasks, quality and safety before anything goes wrong.

Rather than viewing people as the sole determinants of their performance, we can review the conditions in which they work, what makes their work easier, what they have to put up with and what can be improved.

Just recently we’ve been asked to look at how people charge material to a reactor. Like the initial question about the repelling magnets this can at first seem like a simple question, but from a Human Factors perspective, informed by systems thinking, this has different layers of complexity.

I likened this to a research project I worked on at UCL, which looked at charging material to patients (actually infusing patients with fluids and medicine). The simple explanation is that you need the right medicine, at the right place, at the right time, infused in the right location, at the right rate. You might include a double check of everything for good measure (because we know people are fallible).

But… double check everything…? What does everything mean? Nurses generally don’t have time to go round in pairs, checking even a saline flush when inserting a cannula. So what do they check and when, and to what level of detail?

What does double check even mean? Is this a side by side check, or is it fully independent?

It is possible that sometimes two checks are worse than one, as each party believes that the other has checked and so neither does it properly.

The potential deviations and contributory factors can include all manner of things at a deeper level of explanation, e.g.

- poor documentation,

- supply chain issues, so procurement buys a medicine in different packaging,

- poor stock management so a medicine is in the wrong place when selected,

- a mistake in the prescription that is implemented by the nurse,

- poor interface design, if an error on the infusion pump is not detected or perhaps a drop-down menu on an electronic prescribing system leads to a mis-selection.

All of these system issues are potentially part of the story and a deeper level of explanation. These vulnerabilities can be examined before anything has gone wrong, and strengths and good practices can be recognised and amplified, to make work easier and safer.

What informs your framework for understanding human performance?

Quite often we don’t know our own framework, like a fish is unaware of the water it is swimming in.

If you use an incident analysis tool and it has little more than a category ‘human failure’ for recognising the human contribution to an incident…

Or if the only recommendations you give are to retrain and update the procedure…

Then maybe it is too shallow for understanding human performance at work, and maybe you’d benefit from something deeper.

Go deeper into how we understand human error in everyday life and in industrial settings.

Read more about how we analyse tasks using Hierarchical Task Analysis (HTA), and find out more about Critical Task Analysis by doing our FREE 30 minute mini-course.